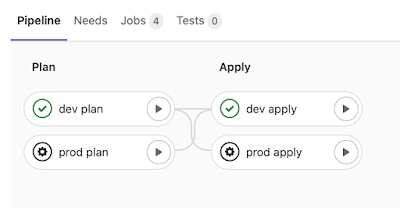

GitLab - terraform plan and apply

How do you apply changes in Terraform? In most cases you run `terraform plan`, then `terraform apply`, and type `yes`. This approach works great on your local machine. But how do you apply changes (and only the changes you want!) in a GitLab job, where you have no access to a shell and cannot approve the output of the `apply` command?

You can use `terraform apply -auto-approve`, but it might be risky... No one likes to destroy something on production without knowing about it in advance.

So, can we run `terraform plan`, check the output, and then run `terraform apply` in another step? We can, but it is still a risky operation. Why? Because `plan` and `apply` are separate operations that know nothing about each other. Without a saved plan, `apply` computes its own plan from scratch, so it can change something that was not shown in the earlier `plan` output. But... according to the Terraform documentation:

> The optional `-out` argument can be used to save the generated plan to a file for later execution with `terraform apply`, which can be useful...
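A minimal sketch of how the `-out` approach might look in a `.gitlab-ci.yml`. It assumes the official `hashicorp/terraform` Docker image and a remote backend configured in your Terraform code, so both jobs see the same state. The stage names, job names, and the plan file name `plan.tfplan` are illustrative assumptions, not taken from the original. The `plan` job saves the plan and passes it to the `apply` job as an artifact. The `apply` job is manual, so someone can review the `plan` job's log before triggering it.

```yaml
# Hypothetical .gitlab-ci.yml sketch: stage/job names and "plan.tfplan" are illustrative.
image:
  name: hashicorp/terraform:latest   # pin a specific version in real pipelines
  entrypoint: [""]                   # the image's default entrypoint is "terraform"; reset it so GitLab can run the script

stages:
  - plan
  - apply

plan:
  stage: plan
  script:
    - terraform init
    # Save the exact set of proposed changes to a file (and print them to the job log)
    - terraform plan -out=plan.tfplan
  artifacts:
    paths:
      - plan.tfplan

apply:
  stage: apply
  script:
    # .terraform/ is not kept between jobs, so initialize again (assumes a remote backend for state)
    - terraform init
    # Apply only what was recorded in the saved plan; no interactive approval is required
    - terraform apply plan.tfplan
  dependencies:
    - plan        # download the plan.tfplan artifact from the plan job
  when: manual    # run only after someone reviews the plan output and clicks "play"
```

Because `terraform apply` receives a saved plan file, it does not prompt for confirmation and executes only the changes recorded in that file. If the state changed after the plan was created, Terraform refuses to apply the stale plan instead of silently doing something else. Keep in mind that saved plan files can contain sensitive values, so the artifact should be handled accordingly.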